Matrox Imaging Matrox Design Assistant X Alternatives & Competitors

Ranked No. 24 of 85 in Robot Software

Top Matrox Design Assistant X Alternatives

- neurala Vision AI Software

- SOLOMON vision JustPick

- MVTec MERLIC

- SOLOMON vision Solmotion

- Matrox Imaging Matrox Imaging Library X

- Liebherr Group Robot vision technology packages - LHRobotics.Vision

- Microsoft Microsoft Robotics Developer Studio

- EasyODM.tech EasyODM Machine Vision Software

- PEKATVISION Software Bundled with Camera

neurala Vision AI Software

Vision

Improve your visual quality inspection process

Neurala’s Vision Inspection Automation (VIA) software helps manufacturers improve their vision inspection and quality control processes to increase productivity, with the flexibility to scale to meet fluctuating demand. Easy to set up and integrate with existing hardware, Neurala VIA software reduces product defects while increasing inspection rates and preventing production downtime, all without requiring previous AI expertise.

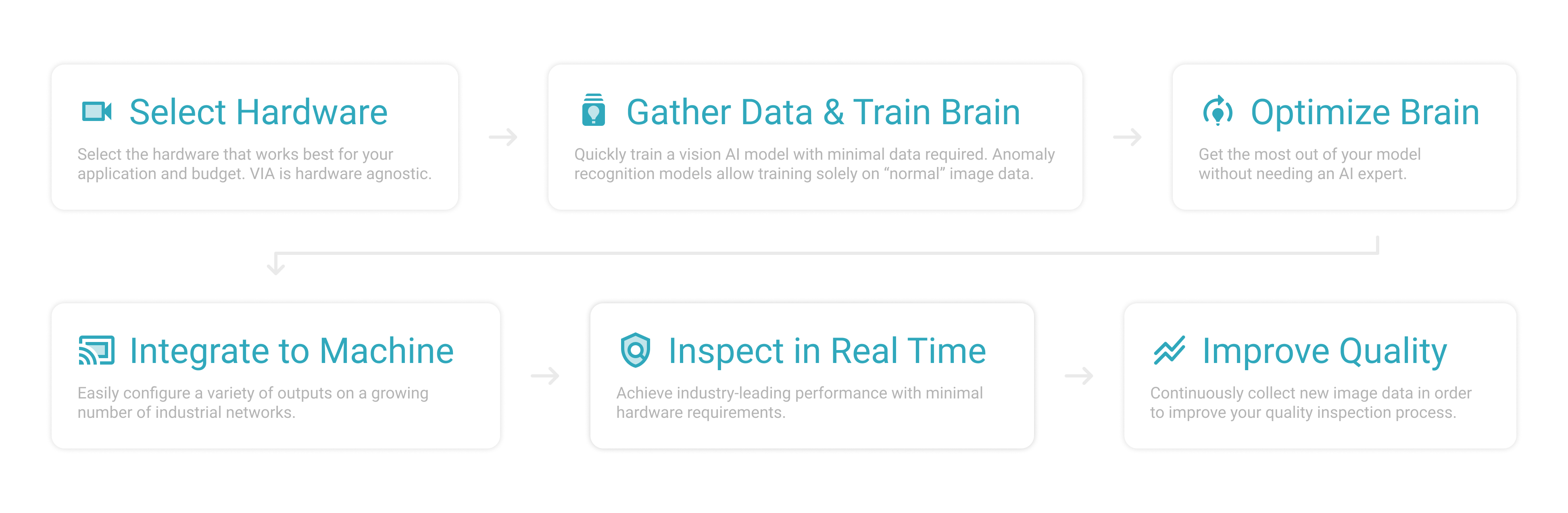

How Neurala’s Vision AI software works


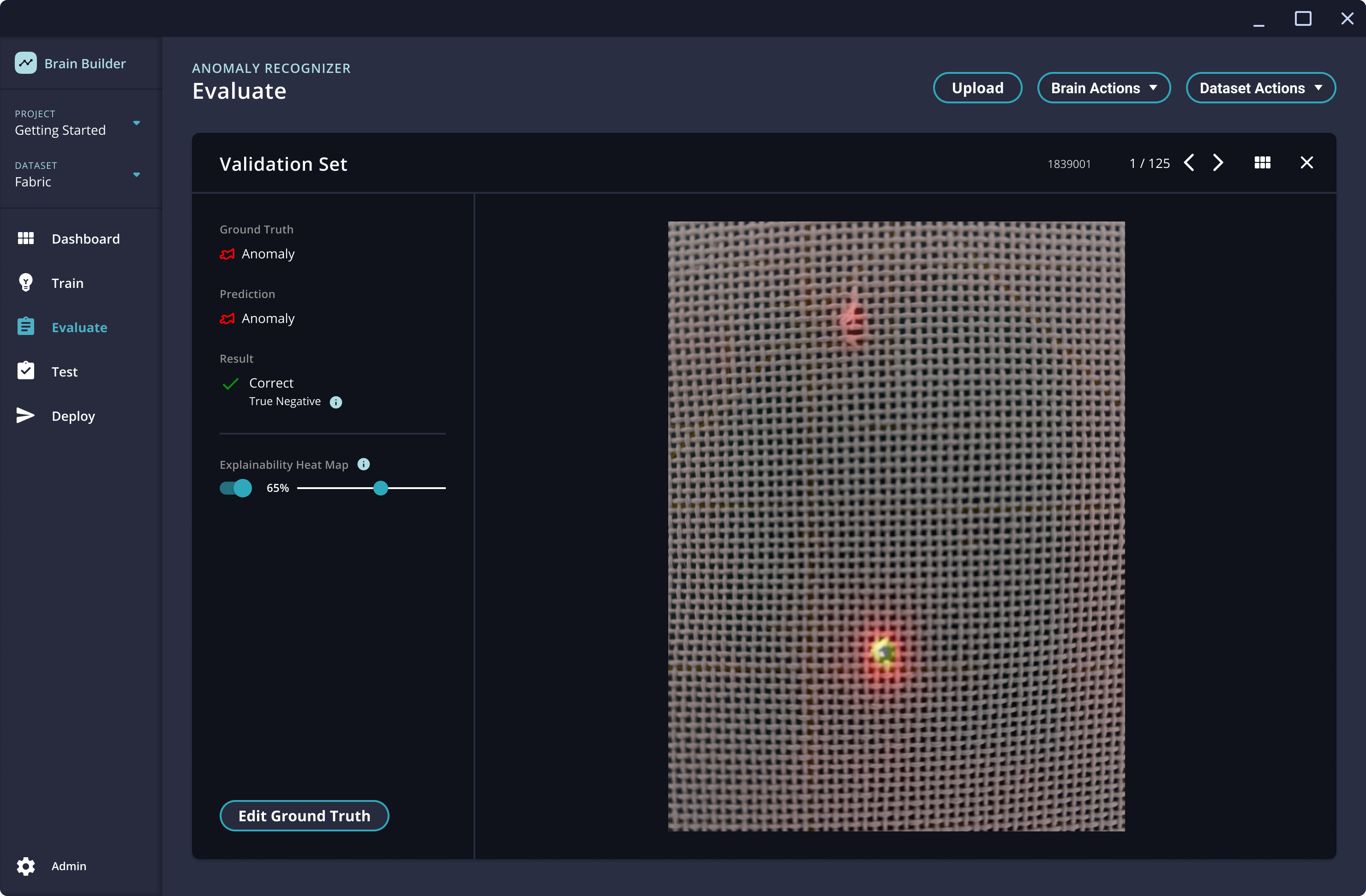

Understanding what your Vision AI is “seeing”

Neurala’s Explainability feature highlights the area of an image causing a vision AI model to make a specific decision about a defect. With this detailed understanding of the workings of the vision AI model, manufacturers can build better performing AI models that continuously improve processes and production efficiencies.

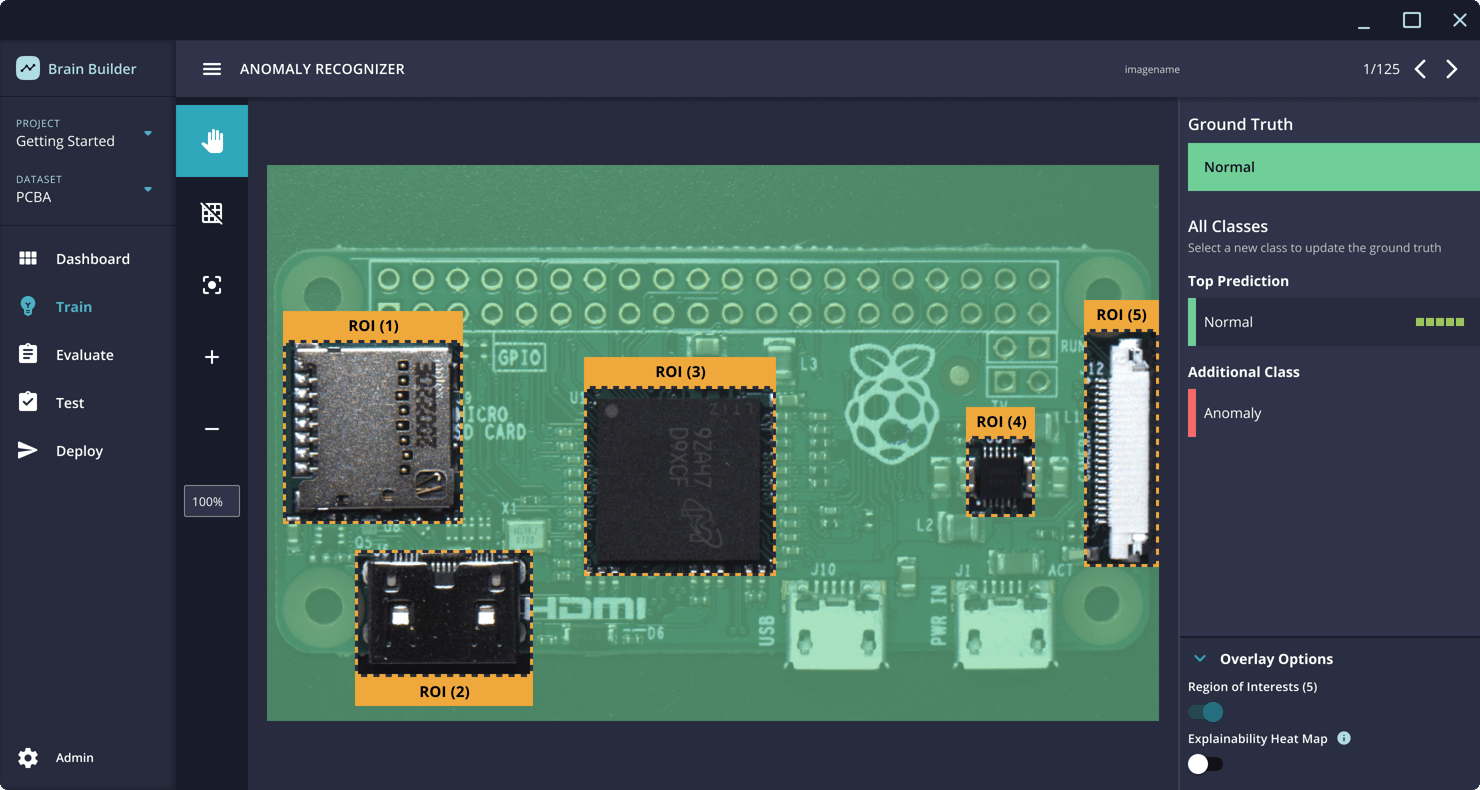

Multiple inspection points on complex products

Neurala's Multi-ROI (region of interest) feature helps ensure that all components of particular interest are in the right place and in the right orientation, without any defects. Multi-ROI can run from a single inspection camera, which dramatically reduces the cost per inspection, without slowing down the run time.
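A minimal sketch of the Multi-ROI idea, assuming a grayscale frame stored as a list of pixel rows: several named regions are cropped from one captured image and each region is checked independently. The `ROI` type, the region names, and `mean_brightness_ok` (a crude stand-in for a trained vision AI model) are hypothetical and are not part of Neurala's software.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Image = List[List[int]]  # grayscale frame as rows of pixel values


@dataclass
class ROI:
    name: str
    x: int  # left column
    y: int  # top row
    w: int  # width in pixels
    h: int  # height in pixels


def crop(frame: Image, roi: ROI) -> Image:
    """Return the sub-image covered by the ROI."""
    return [row[roi.x:roi.x + roi.w] for row in frame[roi.y:roi.y + roi.h]]


def mean_brightness_ok(patch: Image, low: int = 50, high: int = 200) -> bool:
    """Hypothetical stand-in for a per-ROI model: pass if mean intensity is in range."""
    pixels = [p for row in patch for p in row]
    if not pixels:  # ROI fell outside the frame
        return False
    mean = sum(pixels) / len(pixels)
    return low <= mean <= high


def inspect(frame: Image, rois: List[ROI],
            check: Callable[[Image], bool] = mean_brightness_ok) -> Dict[str, bool]:
    """Run the same check on every ROI of a single captured frame."""
    return {roi.name: check(crop(frame, roi)) for roi in rois}
```

Because every region comes from the same frame, adding inspection points in this sketch costs only extra computation, not extra cameras.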

Innovation that’s fast, easy and affordable

Neurala VIA - Beyond machine vision

Defects that are easy for a human to see can be difficult for machine vision to detect. Adding vision AI dramatically increases your ability to detect challenging defect types such as surface anomalies and product variability. Neurala VIA helps improve inspection accuracy and the percentage of products being inspected. You can build data models in minutes, then easily modify and redeploy for changes on the production line.

SOLOMON vision JustPick

Vision

As e-commerce expands at phenomenal rates, handling shipments is proving to be a Herculean task. Effective supply chains that process more and deliver in less time are critical to managing consumer expectations and capitalizing on this upward trend.

JustPick Key Advantages

AI-powered vision systems help robots ‘see’ so they can perform tasks for order fulfillment much more cost-efficiently. With JustPick, robots no longer need to be trained to recognize objects, allowing them to sort and handle large quantities of unknown SKUs on the fly.

Process large, random inventories without programming

Automated sorting to increase throughput

Reduced overhead costs & uninterrupted operations

Compatible with over 20 major robot brands

JustPick Key Features

Created for the e-commerce picking process, JustPick’s custom-built functions help robots identify and pick packages of different shapes and sizes autonomously.

‘Unknown’ picking

JustPick eliminates the need for robots to ‘know’ what objects are in order to pick, allowing large inventories to be processed without having to learn each SKU one by one.

Intelligent gripper

Upon locating an item, JustPick automatically configures the optimum gripping approach, and if needed, adjusts the number of active suction cups to ensure a secure grasp.
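One way to picture the suction-cup adjustment is the sketch below, assuming cup centers are known in the gripper frame and the located item's top face is an axis-aligned rectangle. The function name, the cup layout, and the margin rule are hypothetical illustrations, not JustPick's actual logic.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in millimetres, gripper frame


def active_cups(cups: List[Point], center: Point, width: float, height: float,
                margin: float = 0.0) -> List[int]:
    """Return indices of cups whose centers lie on the item's top face.

    The face is shrunk by `margin` on every side so that cups near an
    edge, which might not seal, are left switched off.
    """
    cx, cy = center
    half_w = width / 2 - margin
    half_h = height / 2 - margin
    return [i for i, (x, y) in enumerate(cups)
            if abs(x - cx) <= half_w and abs(y - cy) <= half_h]
```

With a row of three cups, a small box activates only the middle cup, while a long box activates all three.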

Easy integration

JustPick is compatible with over 20 robot brands, numerous PLCs, and 3D cameras including ToF, structured light, and stereo vision.

Faster set up

No programming is required to operate JustPick. Our user-friendly UI allows users to drag-and-drop preset function blocks to create their unique workflow.

MVTec MERLIC

Vision

A NEW GENERATION OF MACHINE VISION SOFTWARE

MVTec MERLIC is an all-in-one software product for quickly building machine vision applications without any need for programming.

It is based on MVTec's extensive machine vision expertise and combines reliable, fast performance with ease of use. An image-centered user interface and intuitive interaction concepts like easyTouch provide an efficient workflow, which leads to time and cost savings.

MERLIC provides powerful tools to design and build complete machine vision applications with a graphical user interface, integrated PLC communication, and image acquisition based on industry standards. All standard machine vision tools such as calibration, measuring, counting, checking, reading, position determination, as well as 3D vision with height images are included in MVTec MERLIC.

MERLIC 5

MERLIC 5 introduces a new licensing model for the greatest possible flexibility. It allows customers to choose the package, and price, that exactly fits the scope of their application. Depending on the number of image sources and features (“add-ons”) the application requires, the packages Small, Medium, Large, and X-Large, as well as a free trial version, are available. This new "package" concept replaces the previous "editions" model.

With the release of MERLIC 5, MVTec’s state-of-the-art deep learning technologies are finding their way into the all-in-one machine vision software MERLIC. Easier than ever before, users can now harness the power of deep learning for their vision applications.

MERLIC 5 includes Anomaly Detection and Classification deep learning technologies:

The "Detect Anomalies" tool allows users to perform all training and processing steps for anomaly detection directly in MERLIC.

The "Classify Image" tool enables using classifiers (e.g., trained with MVTec's Deep Learning Tool) to easily classify objects in MERLIC.

SOLOMON vision Solmotion

Vision

Vision-guided robot solution combines 3D vision with machine learning

Increases manufacturing flexibility and efficiency

Solmotion is a system that automatically recognizes the product’s location and makes corrections to the robot path accordingly. The system reduces the need for fixtures and precise positioning in the manufacturing process, and can quickly identify the product’s features and changes. This helps the robot to react to any variations in the environment just as if it had eyes and a brain. The use of AI allows robots to break through the limitations of the past, providing users with high flexibility, even when dealing with previously unknown objects.

Solomon’s AI technology combines 2D and 3D vision algorithms, switching between them depending on the context. Through the use of neural networks, the robot is trained not only to see (Vision) but also to think (AI) and to move (Control). This innovative technology was honored in the Gold Category at the Vision Systems Design Innovators Awards. In addition to providing a diverse and flexible vision system, Solmotion supports more than twenty well-known robot brands. This greatly reduces the time and cost of integrating or switching robots, giving customers the ability to automate their production lines rapidly or move them quickly to a different location. This makes Solmotion a one-stop solution that provides system integrators and end users with a full range of smart vision tools.

Through a modular and intelligent architecture, Solmotion can quickly identify product changes and adjust paths in real time, regardless of any modifications made to the production line. This results in a more flexible manufacturing process and helps turn the production environment into a zero-mold, zero-inventory smart factory.

Solmotion Key Advantages:

Cuts mechanical tooling costs

Saves the costs of fixture and storage space

Reduces changeover time

Reduces the accumulated tolerance caused by part placement position

Solmotion Key Features:

CAD/CAM software support (offline programming)

Graphical User Interface, easy for editing program logic

Automatic object recognition, and corresponding path loading

Automatic/manual point cloud data editing

User-friendly path editing/creation/modification

Project Management/Robot Program Backup

Support for more than 20 well-known robot and PLC brands

ROS automatic obstacle avoidance function

Solmotion Key Functions

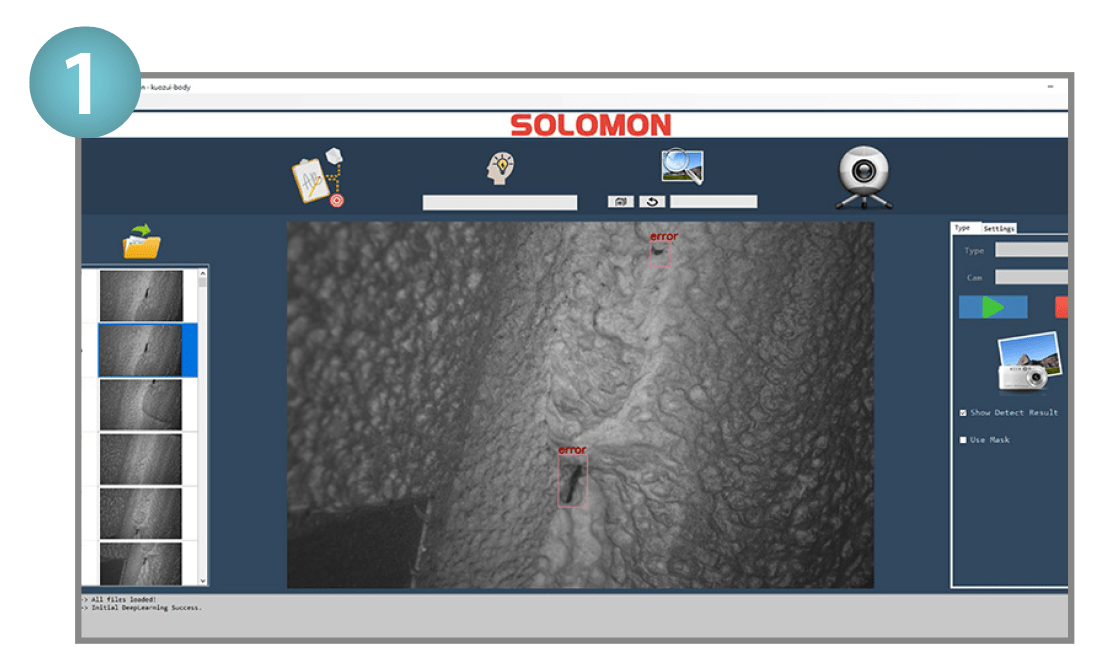

AI Deep Learning tool

Neural networks can be trained to identify object features and defects on the surfaces of items. Compared with traditional rule-based automated optical inspection (AOI), AI inspection covers a wider range of application scenarios, is smarter, and does not require deep technical knowledge. Combined with Solmotion vision-guided robot technology, a camera mounted on a robot can act like human eyes and inspect every detail on the surface of an object.

Applications:

Painting defects and welding inspection, mold repairing, metal defect inspection, and food sortation.

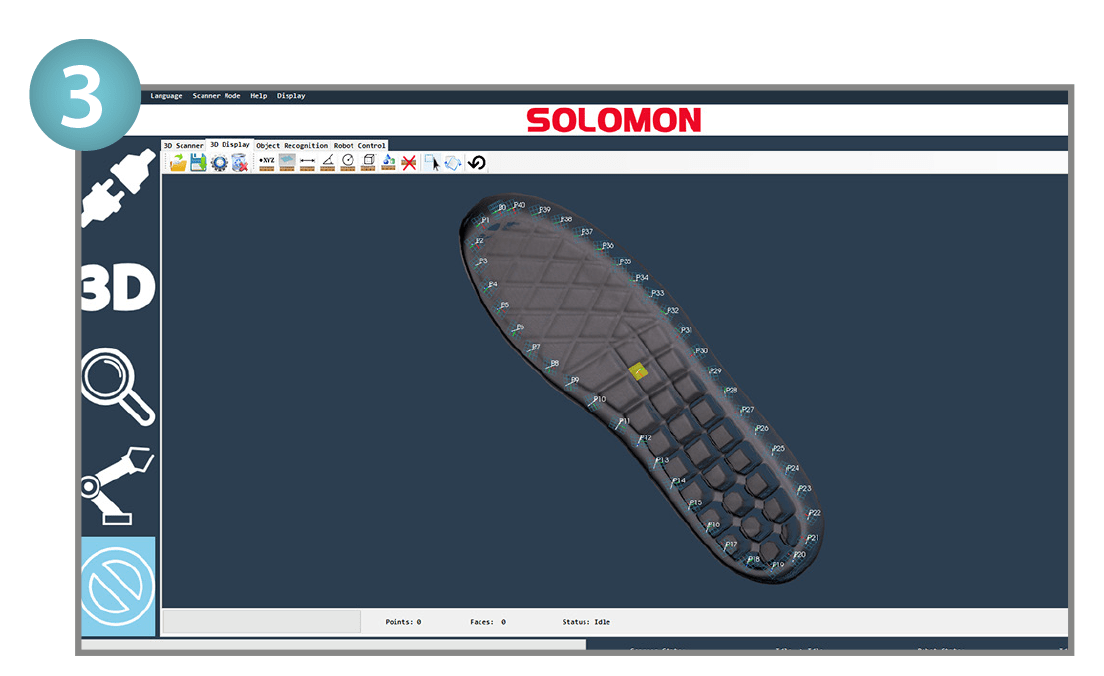

3D vision positioning system

Objects can be placed randomly without the need for precision fixtures or a positioning mechanism. By visually recognizing partial features, the AI locates the parts in space and generates their displacement and rotation coordinates in real time, which are then fed back to the robot for direct processing. To achieve flexible production, the system also uses a path-loading function based on object feature recognition. The software can also generate the robot path through offline programming, making it suitable for high-mix or low-volume, mixed production scenarios.

Applications:

Various Robot Machine Tending Applications.
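The displacement-and-rotation step can be illustrated with a simplified planar case: given matched feature points from a model and from the camera, a closed-form least-squares fit recovers the rotation angle and translation. This is a minimal sketch assuming known point correspondences in 2D; it is a standard rigid-registration technique, not Solomon's algorithm, and a real 3D system would estimate a full six-degree-of-freedom pose.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def estimate_pose(model: List[Point], observed: List[Point]) -> Tuple[float, float, float]:
    """Least-squares 2D rigid transform (theta, tx, ty) mapping model points
    onto observed points. Points must be listed in corresponding order."""
    n = len(model)
    if n != len(observed) or n < 2:
        raise ValueError("need at least two corresponding point pairs")
    # Centroids of both point sets
    mx = sum(p[0] for p in model) / n
    my = sum(p[1] for p in model) / n
    ox = sum(p[0] for p in observed) / n
    oy = sum(p[1] for p in observed) / n
    # Sum dot and cross products of the centered pairs; atan2 of the two
    # sums gives the rotation that best aligns the sets in a least-squares sense
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(model, observed):
        ax, ay, bx, by = ax - mx, ay - my, bx - ox, by - oy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    # Translation moves the rotated model centroid onto the observed centroid
    c, s = math.cos(theta), math.sin(theta)
    tx = ox - (c * mx - s * my)
    ty = oy - (s * mx + c * my)
    return theta, tx, ty
```

The resulting angle and offset are the kind of correction a robot controller could apply to a taught path for a randomly placed part.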

Robotic path planning auto-generation

There is no need to set the robot path manually: Solomon’s AI learns the edge and automatically generates the path plan. The processing angle can be set to “vertical” or “specified” according to the situation. Surface-filling path generation and corner path optimization functions are also available. Supporting more than 20 robot and PLC brands, the solution is suitable for high-mix or low-volume products whose path teaching is time-consuming and highly variable.

Applications:

Cutting, gluing, edge trimming, and painting.

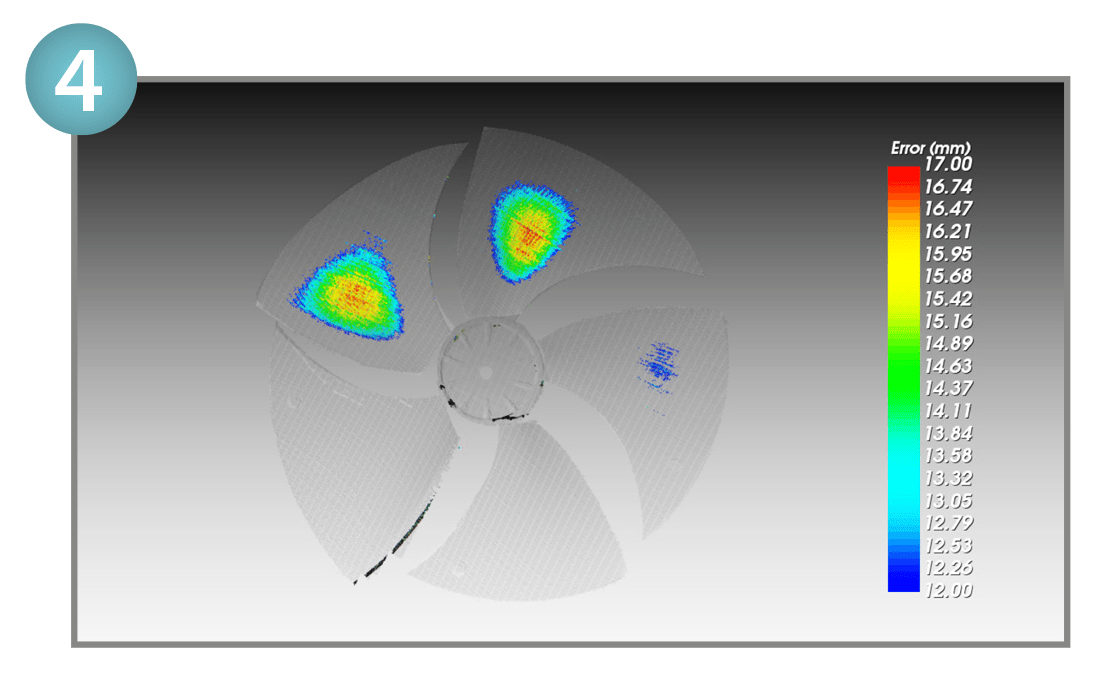

3D Matching Defect Inspection

The software compares the generated 3D point cloud of the object with the reference CAD model in real time and generates a report based on a preset difference threshold. The report contains the differences in height, width, and volume. This data can also be used to generate the robot path automatically. The solution is suitable for object matching and deformation compensation applications.

Applications:

Inspection, trimming, repairing, milling, and 3D printing.
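The threshold-based comparison in 3D Matching Defect Inspection can be sketched as follows, assuming the scan and the CAD reference have already been registered and resampled onto the same XY grid as height maps. The `DeviationReport` fields, the grid representation, and the function name are illustrative assumptions, and width differences are omitted for brevity.

```python
from dataclasses import dataclass
from typing import List

HeightMap = List[List[float]]  # heights in mm on a regular XY grid


@dataclass
class DeviationReport:
    max_abs_deviation: float   # largest |measured - reference| in mm
    cells_over_threshold: int  # grid cells whose deviation exceeds the threshold
    volume_difference: float   # signed volume difference in mm^3
    passed: bool               # True if no cell exceeds the threshold


def compare_to_reference(measured: HeightMap, reference: HeightMap,
                         threshold: float, cell_area: float) -> DeviationReport:
    """Compare a measured height map with a reference rendered from CAD."""
    if len(measured) != len(reference) or any(
            len(m) != len(r) for m, r in zip(measured, reference)):
        raise ValueError("height maps must share the same grid")
    max_dev, over, volume = 0.0, 0, 0.0
    for m_row, r_row in zip(measured, reference):
        for m, r in zip(m_row, r_row):
            d = m - r
            max_dev = max(max_dev, abs(d))
            if abs(d) > threshold:
                over += 1
            volume += d * cell_area  # each cell contributes height difference x area
    return DeviationReport(max_dev, over, volume, over == 0)
```

A per-cell deviation map of this kind is also what a path generator could use to decide where material must be added or removed.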

Matrox Imaging Matrox Imaging Library X

Vision

Matrox® Imaging Library (MIL) X is a comprehensive collection of software tools for developing machine vision, image analysis, and medical imaging applications. MIL X includes tools for every step in the process, from application feasibility to prototyping, through to development and ultimately deployment.

The software development kit (SDK) features interactive software and programming functions for image capture, processing, analysis, annotation, display, and archiving. These tools are designed to enhance productivity, thereby reducing the time and effort required to bring solutions to market.

Image capture, processing, and analysis operations have the accuracy and robustness needed to tackle the most demanding applications. These operations are also carefully optimized for speed to address the severe time constraints encountered in many applications.

MIL X at a glance

- Solve applications rather than develop underlying tools by leveraging a toolkit with more than 25 years of reliable performance

- Tackle applications with utmost confidence using field-proven tools for analyzing, classifying, locating, measuring, reading, and verifying

- Base analysis on monochrome and color 2D images as well as 3D profiles, depth maps, and point clouds

- Harness the full power of today’s hardware through optimizations exploiting SIMD, multi-core CPU, and multi-CPU technologies

- Support platforms ranging from smart cameras to high-performance computing (HPC) clusters via a single consistent and intuitive application programming interface (API)

- Obtain live data in different ways, with support for analog, Camera Link®, CoaXPress®, DisplayPort™, GenTL, GigE Vision®, HDMI™, SDI, and USB3 Vision® interfaces

- Maintain flexibility and choice through support for 64-bit Windows® and Linux® along with Intel® and Arm® processor architectures

- Leverage available programming know-how with support for C, C++, C#, CPython, and Visual Basic® languages

- Experiment, prototype, and generate program code using MIL CoPilot interactive environment

- Increase productivity and reduce development costs with Matrox Vision Academy online and on-premises training.

First released in 1993, MIL has evolved to keep pace with, and anticipate, emerging industry requirements. It was conceived with an easy-to-use, coherent API that has stood the test of time. MIL pioneered the concept of hardware independence with the same API for different image acquisition and processing platforms. A team of dedicated, highly skilled computer scientists, mathematicians, software engineers, and physicists continues to maintain and enhance MIL.

MIL is maintained and developed using industry-recognized best practices, including peer review, user involvement, and daily builds. Users are asked to evaluate and report on new tools and enhancements, which strengthens and validates releases. Ongoing MIL development is integrated and tested as a whole every day.

MIL SQA

In addition to the thorough manual testing performed prior to each release, MIL continuously undergoes automated testing during the course of its development. The automated validation suite—consisting of both systematic and random tests—verifies the accuracy, precision, robustness, and speed of image processing and analysis operations. Results, where applicable, are compared against those of previous releases to ensure that performance remains consistent. The automated validation suite runs continuously on hundreds of systems simultaneously, rapidly providing wide-ranging test coverage. The systematic tests are performed on a large database of images representing a broad sample of real-world applications.
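Comparing results against previous releases can be sketched as a simple regression check, assuming each test case yields a single numeric result stored per release. The test case names, tolerances, and function name are hypothetical; this is not Matrox's validation suite.

```python
import math
from typing import Dict, List


def find_regressions(current: Dict[str, float], baseline: Dict[str, float],
                     rel_tol: float = 1e-6, abs_tol: float = 1e-9) -> List[str]:
    """Return baseline cases that are missing from, or differ in, the current results."""
    regressions: List[str] = []
    for case, expected in baseline.items():
        actual = current.get(case)
        # A missing result counts as a regression, as does a value outside tolerance
        if actual is None or not math.isclose(actual, expected,
                                              rel_tol=rel_tol, abs_tol=abs_tol):
            regressions.append(case)
    return regressions
```

Running such a check over a large image database on many machines gives broad coverage; the tolerance choice decides how much numeric drift between releases is acceptable.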

Latest key additions and enhancements:

Simplified training for deep learning

New deep neural networks for classification and segmentation

New deep learning inference engine

Additional 3D processing operations including filters

3D blob analysis

3D shape finding

Hand-eye calibration for robot guidance

Improvements to SureDotOCR®

Makeover of CPython interface now with NumPy support

Field-proven vision tools

Image analysis and processing tools

Central to MIL X are tools for calibrating; classifying, enhancing, and transforming images; locating objects; extracting and measuring features; reading character strings; and decoding and verifying identification marks. These tools are carefully developed to provide outstanding performance and reliability, and can be used within a single computer system or distributed across several computer systems.

- Pattern recognition tools

- Shape finding tools

- Feature extraction and analysis tools

- Classification tools (using machine learning including deep learning)

- 1D and 2D measurement tools

- Color analysis tools

- Character recognition tools

- 1D and 2D code reading and verification tool

- Registration tools

- 2D calibration tool

- Image processing primitives tools

- Image compression and video encoding tool

- Tools fully optimized for speed

- 3D vision tools

- Distributed MIL X interface

MIL CoPilot interactive environment

Included with MIL X is MIL CoPilot, an interactive environment to facilitate and accelerate the evaluation and prototyping of an application. This includes configuring the settings or context of MIL X vision tools. The same environment can also initiate—and therefore shorten—the application development process through the generation of MIL X program code.

Running on 64-bit Windows, MIL CoPilot provides interactive access to MIL X processing and analysis operations via a familiar contextual ribbon menu design. It includes various utilities to study images and help determine the best analysis tools and settings for a given project. Also available are utilities to generate a custom encoded chessboard calibration target and to edit images. Applied operations are recorded in an Operation List, which can be edited at any time and can also take the form of an external script. An Object Browser keeps track of MIL X objects created during a session and gives convenient access to them at any moment. Non-image results are presented in tabular form, and a table entry can be identified directly on the image. The annotation of results onto an image is also configurable. MIL CoPilot presents dedicated workspaces for training one of the supplied deep learning neural networks for classification. These workspaces feature a simplified user interface that reveals only the functionality needed to accomplish the training task, such as an image label mask editor. Another specialized workspace is provided to batch-process images from an input folder to an output folder.

Once an operation sequence is established, it can be converted into functional program code in any language supported by MIL X. The program code can take the form of a command-line executable or dynamic link library (DLL); this can be packaged as a Visual Studio project, which in turn can be built without leaving MIL CoPilot. All work carried out in a session is saved as a workspace for future reference and sharing with colleagues.

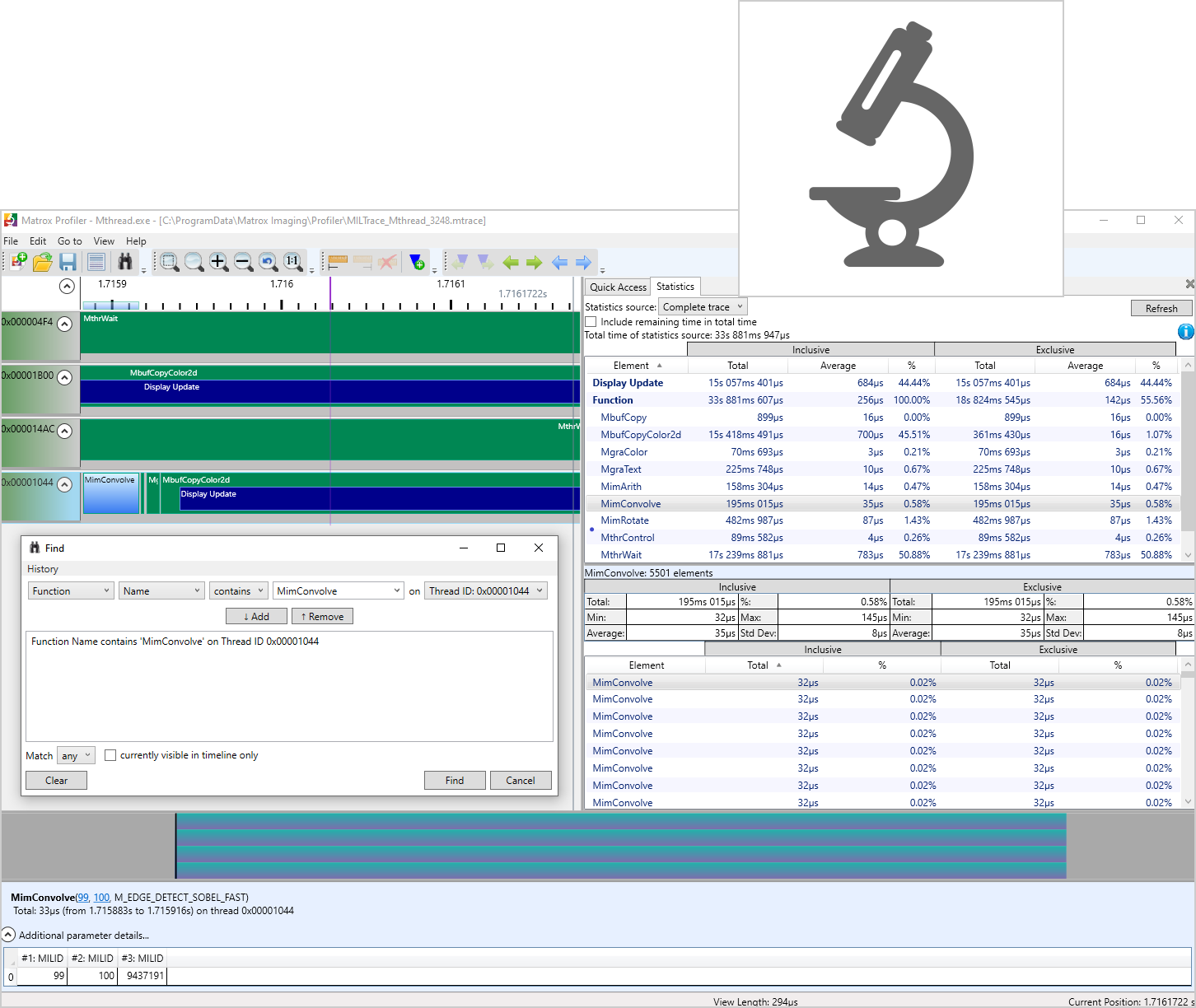

Matrox Profiler

Matrox Profiler is a Windows-based utility for post-analyzing the execution of a multi-threaded application to find performance bottlenecks and synchronization issues. It presents the function calls made over time by each application thread on a navigable timeline. Matrox Profiler lets users search for and select specific function calls to see their parameters and execution times. It computes execution-time statistics and presents them on a per-function basis. Matrox Profiler tracks not only MIL X functions but also suitably tagged user functions. Function tracing can be disabled altogether to safeguard the inner workings of a deployed application.
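A minimal Python analogue of this kind of tracing, recording start times and durations per thread and summarizing them per function, might look like the sketch below. The `traced` decorator, the `TRACE` list, and the `TRACING_ENABLED` switch are hypothetical names; Matrox Profiler's actual tagging mechanism is not shown here.

```python
import functools
import statistics
import threading
import time
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

# (thread name, function name, start time, duration) records, i.e. a per-thread timeline
TRACE: List[Tuple[str, str, float, float]] = []
_lock = threading.Lock()
TRACING_ENABLED = True  # set to False to disable tracing in a deployed build


def traced(func: Callable) -> Callable:
    """Record the start time and duration of each call to the decorated function."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        if not TRACING_ENABLED:
            return func(*args, **kwargs)
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            duration = time.perf_counter() - start
            with _lock:  # several threads may append concurrently
                TRACE.append((threading.current_thread().name,
                              func.__name__, start, duration))
    return wrapper


def per_function_stats() -> Dict[str, Dict[str, float]]:
    """Summarize recorded durations per function name."""
    durations: Dict[str, List[float]] = defaultdict(list)
    for _, name, _, duration in TRACE:
        durations[name].append(duration)
    return {name: {"calls": len(d), "total": sum(d),
                   "mean": statistics.mean(d), "max": max(d)}
            for name, d in durations.items()}
```

Tagging only selected functions keeps the overhead low, and sorting `TRACE` by thread and start time reconstructs a timeline similar to the one described above.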

Development features:

- Complete application development environment

- Portable API

- .NET development

- JIT compilation and scripting

- Simplified platform management

- Designed for multi-tasking

- Buffers and containers

- Saving and loading images

- Industrial and robot communication

- WebSocket access

- Flexible and dependable image capture

- Matrox Capture Works

- Simplified 2D image display

- Graphics, regions, and fixtures

- Native 3D display

- Application deployment

- Documentation, IDE integration, and examples

- MIL-Lite X

- Software architecture

Liebherr Group Robot vision technology packages - LHRobotics.Vision

Bin picking | Vision

Liebherr is making its expertise in the field of industrial robot vision applications available to a wide user group with the LHRobotics.Vision technology packages.

The technology packages consist of a projector-based vision camera system for optical data collection and software for object identification and selection, collision-free withdrawal of parts, and robot path planning to the stacking point.

Software

Basic license:

Suitable for customers who only need to set the gripped workpiece down roughly; path planning is not necessarily required for this. Because the robot model and obstacles are not included, it is also suitable for customers who place less value on collision checking outside the bin, for example where the gripper can never fully enter the bin.

Pro license:

The professional license offers unrestricted use of the LHRobotics.Vision software. This license is particularly suitable for customers who value full collision checking of the path from removal through to stacking.

Smart bin-picking software

Design a complex application without any programming knowledge at all. LHRobotics.Vision makes it possible. The intuitive graphical user interface enables quick entry of all necessary information using simple steps, starting with:

- Attaching workpieces

- Configuration of transport containers

- Models can be created directly in the software for simple geometry, or existing CAD data can be imported

Step by step to the right grip

To realistically represent the withdrawal of parts, including all axis movements, in the software, highly detailed gripper models are created; even bendable grippers or a 7th or 8th axis can be represented. The interference contour takes into account the current state of the gripper, open or closed. Again, existing CAD data can be used.

When the workpiece and gripper are combined, the task is to define suitable picking positions. This can be done by visually positioning the gripper or by precisely defining its coordinates. Degrees of freedom can be tested, and even realistic removal cycles can be simulated with the LHRobotics.Vision Sim plugin. This makes it possible to optimize the gripper within the software itself.

Integrated simulation possibilities with LHRobotics.Vision Sim

If changes are necessary, for example when modified or completely new parts are to be gripped, you will want to test in advance whether the application still works. The LHRobotics.Vision Sim option package includes this capability. Because it works as offline programming, that is, without intervening in the running cell, feasibility can be tested beforehand. The entire process for the cell can therefore be tested and prepared for operation in advance.

The environment in view

Once parts and grippers have been created, the periphery follows. The robot used is selected from the library to check the work area. Any obstacles can be taken into account:

- Input of obstacles present in the robot’s work area

- Hand-eye calibration of the system between sensor and robot

- Definition of framework conditions for collision-free withdrawal of parts

- Path planning

Microsoft Microsoft Robotics Developer Studio

Simulation | Monitoring | Developer tools | Vision

Microsoft Robotics Developer Studio (MSRS) is a powerful toolkit released by Microsoft Research, designed to build service-based applications for a wide range of hardware devices. Despite its name, it is not limited to robots: it offers a service-oriented runtime and visual authoring tools, making it applicable to various industries beyond robotics.

One standout feature of MSRS is its service-oriented runtime, composed of two lower-level runtimes: Decentralized Software Services (DSS) and Coordination and Concurrency Runtime (CCR). DSS is based on REST principles, commonly used to power the Web, enabling lightweight and efficient communication between services. CCR, on the other hand, is a Microsoft .NET Framework library supporting asynchronous processing, which is crucial for handling the continuous data flow in robotics applications.
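CCR itself is a .NET library, but the asynchronous message-passing style it supports can be approximated in Python with `asyncio`: a sensor posts readings to a queue (playing the role of a port) while a receiver handles each message as it arrives. This is an analogy under that assumption, not the CCR or DSS API, and the function names are hypothetical.

```python
import asyncio
from typing import List, Optional


async def sensor(port: "asyncio.Queue[Optional[float]]", readings: List[float]) -> None:
    """Post each reading to the port as it is produced, then a None sentinel."""
    for value in readings:
        await port.put(value)
        await asyncio.sleep(0)  # yield so the receiver can run concurrently
    await port.put(None)


async def receiver(port: "asyncio.Queue[Optional[float]]", limit: float) -> List[float]:
    """Handle messages as they arrive and collect readings above the limit."""
    alerts: List[float] = []
    while (value := await port.get()) is not None:
        if value > limit:
            alerts.append(value)
    return alerts


async def main(readings: List[float], limit: float) -> List[float]:
    port: "asyncio.Queue[Optional[float]]" = asyncio.Queue()
    # Run producer and consumer concurrently on the same event loop
    _, alerts = await asyncio.gather(sensor(port, readings), receiver(port, limit))
    return alerts
```

Neither side blocks the other while waiting, which is the property that matters for continuous sensor streams.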

MSRS provides visual authoring tools, tutorials, and documentation, making it beginner-friendly for developers new to robotics. Hobbyists and academic researchers can freely download and use MSRS, while commercial developers can purchase the toolkit for a small fee. The inclusion of a Visual Programming Language (VPL) tool and Visual Simulation Environment (VSE) further simplifies the development process, making it accessible to a wide range of users interested in creating robotics-based applications.

EasyODM.tech EasyODM Machine Vision Software

Vision

PEKATVISION Software Bundled with Camera

Vision

PEKAT VISION© is software for industrial visual inspection and quality assurance. Together with a smart camera, it is a perfect solution for those who would like a ready-to-use AI solution running inside a camera.

For companies that would like to use all the possibilities our software offers but cannot work with computers, our software is available bundled with a camera. It is an alternative to computers, with all the advantages of PEKAT VISION in one small device.

Smart Camera Solution

PEKAT VISION and the ADLINK Smart Camera offer a compact solution for defect detection. All you need to improve the quality control of your products is a small camera that is fully capable of running PEKAT software.

AI Industrial Smart Camera

Integrated NVIDIA Jetson

IP67 certified

USB Type C for video, power and connectivity